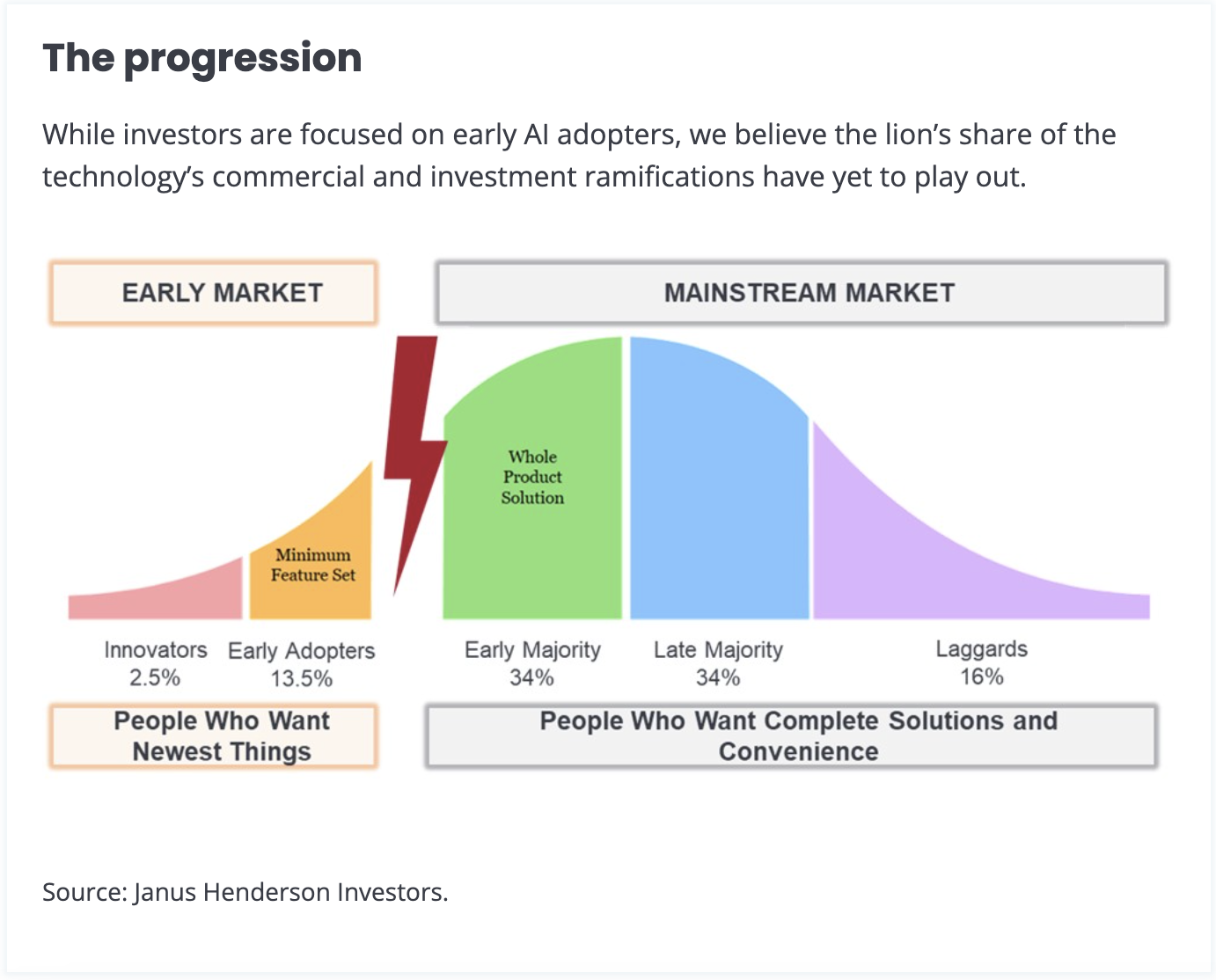

In the roughly 18 months since OpenAI released ChatGPT, artificial intelligence (AI) has become an inescapable topic of conversation within the investment community, corporate boardrooms, and policy circles. Given this attention, some may ask: Is AI really such a big deal? Our answer is a resounding "yes."

The reasons are manifold. At the highest level, AI, in our view, forever changes how people and businesses interact with technology. From a macro perspective, it has the potential to be the greatest productivity enhancer since the Industrial Revolution more than 200 years ago. Lastly, with respect to the workplace and equities, we expect AI to provide an earnings tailwind as companies deploy it to streamline costs, generate revenue and, in many instances, develop new products.

On the shoulders of giants

ChatGPT's arrival may have seemed like a lightning strike, but the concept of AI and its predecessor technologies were decades in the making. In fact, more than 70 years ago, the famed mathematician Alan Turing posed the question of whether computers could eventually think.

Over the decades, as processing power grew and algorithms became more sophisticated, computer applications could perform increasingly complex tasks that could indeed be viewed as steps toward thinking. However, programs such as IBM's Deep Blue, which famously defeated world chess champion Garry Kasparov, were largely reacting to scenarios they had been programmed to navigate.

The advancements exemplified by ChatGPT are owed to large language models (LLMs) and generative AI (GenAI). These complementary technologies enable powerful algorithms to analyze massive data sets. LLMs produce advanced predictive text, while GenAI can create original content based on inferences drawn from its data sources rather than relying on predefined cues from human inputs. Chatbots like ChatGPT, however, are just one example of the AI-enabled functionality that we expect to see deployed across the global economy in the coming years.

Why AI now?

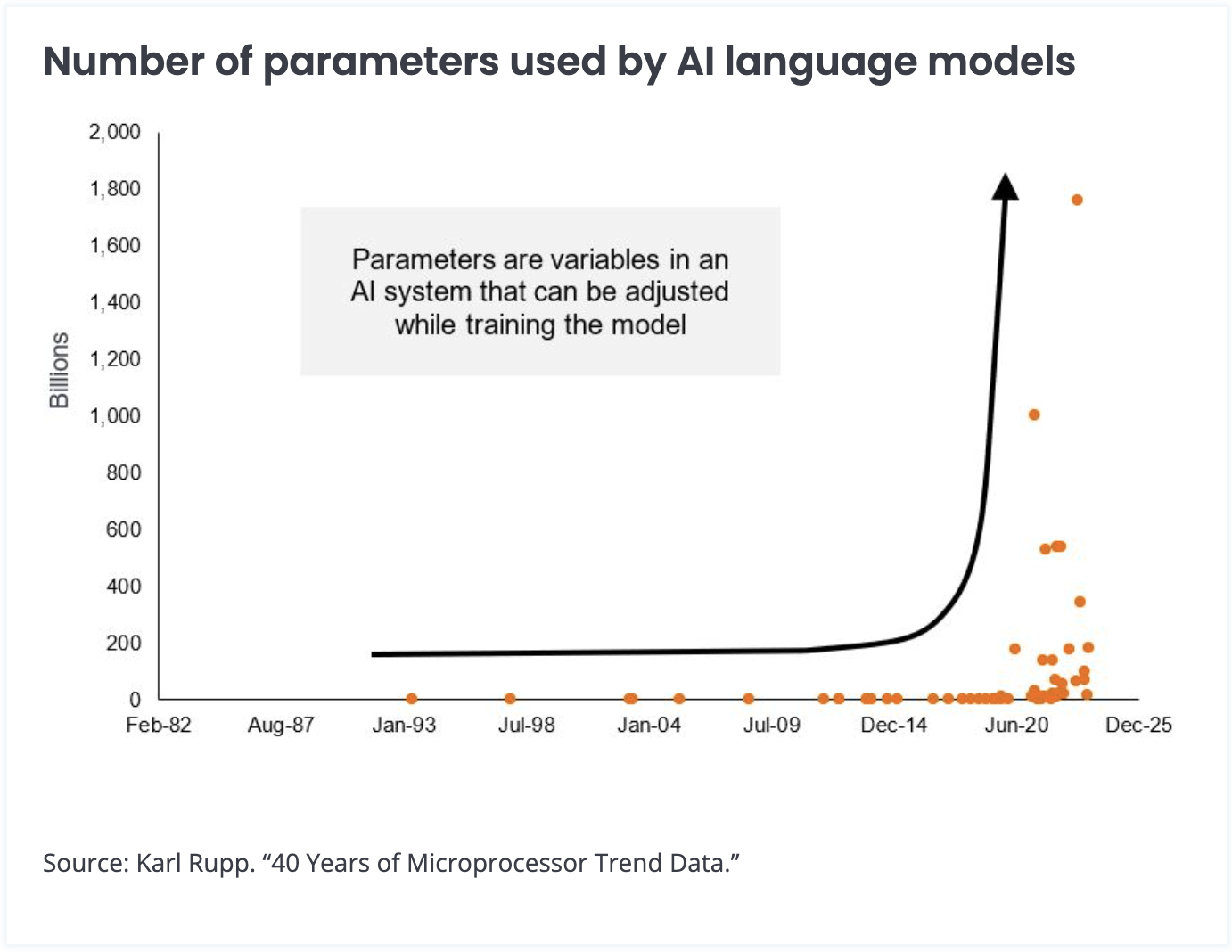

The number of parameters in AI models has grown exponentially, along with the ability of processors to analyze and make inferences from these massive data sets.

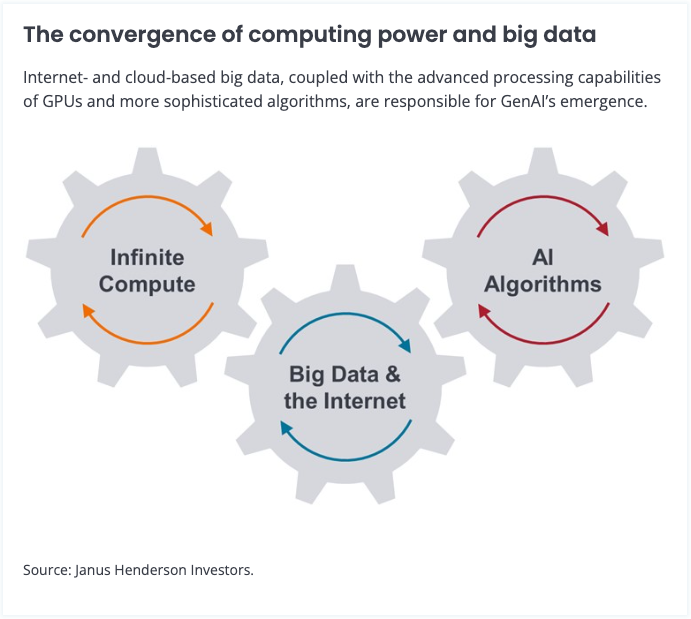

That the promise of AI is only now being delivered is no accident. It is due to the convergence of several essential inputs: first, an unprecedented amount of available data, thanks in part to the proliferation of the internet and the storage capabilities of the cloud; second, the ability of cutting-edge graphics processing units (GPUs) and application-specific integrated circuits (ASICs) to systematically absorb this data and extract the maximum information from it; third, advancements in memory chips; and fourth, increasingly sophisticated and specialized software applications that provide platforms upon which AI can be deployed.

Generative AI is the most recent step in this process, and while it had long been anticipated, its true capabilities and use cases could only be gauged once it arrived. This follows the pattern of earlier AI-related advancements, including analytical applications that could find patterns in large data sets, diagnose issues, and deliver predictive analytics.

Next came machine learning, which could implement the lessons learned from these analytics. Even as we are only beginning to appreciate the power of GenAI, we recognize that the next stage of its evolution may be artificial general intelligence (AGI): systems that could one day learn and reason at a human level.

Granted, the ability of AI algorithms to scour data, make connections, draw inferences and, when prompted, test hypotheses and reach conclusions may not be the same as human thinking. But AI's ability to rapidly leverage large data sets to achieve these objectives makes it a powerful complement to workers in a vast array of professions and roles.

In this context, AI can be viewed as both a secular theme in its own right and the culmination of other themes we've followed over the years, among them the cloud, the Internet of Things, and mobility. Their convergence, in our view, represents a generational shift in computing akin to the transition from mainframes to servers and personal computers and, more recently, the adoption of the internet and mobile devices.